Alternative grading in algorithms

Reflections on a first attempt at alternative grading

Today we have a guest post from Kevin Sun. Kevin most recently taught computer science (CS) at Elon University. He received his Ph.D. in CS from Duke University and B.S. in CS and mathematics from Rutgers University. He has primarily taught algorithms, and he has written course notes that can be found on his website. He also writes a newsletter called Teaching CS. In Fall 2023, he will be joining UNC-Chapel Hill as a teaching assistant professor.

If you’d like to contribute a guest post to this blog, fill out our guest post information form and let us know what you’re thinking about writing!

Last semester, I used a semi-alternative grading scheme based on specifications and standards-based grading. It was a small, but mostly positive, step away from traditional grading. In this post, I’ll discuss the details, my thoughts, and takeaways.

Background & Description

The course was Algorithm Analysis, an upper-level course required for computer science (CS) majors, at Elon University. Elon is a mid-sized, private, primarily undergraduate university, with an acceptance rate of 74% (for the class of 2026), an average class size of 20, and a cap on all class sizes of 33. My class had 27 students, most of whom were CS majors. Based on my surveys and conversations with them, most students were interested in a career in software development, IT, or an adjacent field.

One general challenge of teaching algorithms is that its connection with those fields is not apparent: software development focuses on code, tools, and large-scale projects, while the field of algorithms focuses on mathematical proofs, abstractions, and self-contained problems. An ongoing goal of mine is to reconcile this discrepancy while still teaching students the fundamental ideas of theoretical computer science.

In the fall semester, I had used a traditional grading scheme: tests, homework, and (some) attendance were graded as percentages, and the final grade was a weighted average of these percentages. But I felt that this approach had two main issues. First, cramming for each test and promptly forgetting much of the content afterward seemed common. Second, the scores felt too arbitrary. For example, the difference between a 6/10 and a 5/10 was not clear, even though the former was passing while the latter was not.

Fortunately, I had read about various alternative grading systems (including many posts on this blog!) that addressed these concerns. Inspired by them, I made a few changes in the spring:

Tests only: Students took six in-class, timed assessments: five quizzes and a final exam. Each quiz was worth 12 points and the final exam was worth 40 points, for a total of 100 points. Nothing else (e.g., homework, attendance) was formally assigned or graded.

Binary scoring: Every problem on every test was worth either 1 or 2 points, depending on whether the problem was lower or higher (respectively) on Bloom’s taxonomy. There was no partial credit for any problem, so if a problem was worth 1 point, each student received either 0 or 1 points, and if it was worth 2 points, each student received either 0 or 2 points.

Quiz replacement: The final exam had five parts; each part corresponded to (and resembled) a quiz. If a student’s score on Part X was higher than their score on Quiz X (scaled accordingly), then their score on Quiz X was replaced by their score on Part X. This applied for every value of X from 1 through 5. (So it was possible for a student to skip every quiz and still ace the class.)

Sum-based thresholds: A table in the syllabus translated the sum (rather than the average) of points received into a letter grade.

At least 80 points earned an A, at least 70 points earned a B, and so on. I deliberately set these thresholds somewhat low to compensate for the absence of partial credit and the uncertainty of using a new grading scheme.
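The scheme is simple enough to state as a short computation. The Python sketch below applies the quiz-replacement rule and then sums the points. It assumes each of the five final-exam parts was worth 8 points (40 / 5), which the post does not specify, so a part score is scaled by 12/8 before it is compared with the quiz. The function names are hypothetical, and only the A and B cutoffs stated above are encoded.

```python
from fractions import Fraction

QUIZ_POINTS = 12   # each of the five quizzes (stated in the post)
PART_POINTS = 8    # ASSUMPTION: the 40-point final split into five equal 8-point parts
CUTOFFS = [("A", 80), ("B", 70)]  # only the two cutoffs stated in the post

def effective_quiz_scores(quizzes, parts):
    """Quiz replacement: Part X replaces Quiz X whenever its scaled score is higher."""
    assert len(quizzes) == len(parts) == 5
    scale = Fraction(QUIZ_POINTS, PART_POINTS)  # 12/8, kept exact to avoid float rounding
    return [max(Fraction(q), p * scale) for q, p in zip(quizzes, parts)]

def total_points(quizzes, parts):
    """Sum (not average) of effective quiz scores plus the final exam's own points."""
    return sum(effective_quiz_scores(quizzes, parts)) + sum(parts)

def letter_grade(total, cutoffs=CUTOFFS):
    """Return the first letter whose minimum is met, or None if below every listed cutoff."""
    for letter, minimum in cutoffs:
        if total >= minimum:
            return letter
    return None

# A student who skips every quiz but aces the final still reaches 100 points.
print(total_points([0, 0, 0, 0, 0], [8, 8, 8, 8, 8]))  # 100
# Mixed case: Parts 2 and 5 replace the weaker Quiz 2 and Quiz 5 scores.
t = total_points([10, 6, 12, 9, 0], [6, 6, 8, 4, 7])
print(float(t), letter_grade(t))  # 81.5 A
```

Using `Fraction` keeps the 1.5 scaling exact, so a threshold comparison never hinges on floating-point rounding.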

In summary: grades were based on six in-class, timed assessments; every problem on every assessment was worth either 1 or 2 points with no partial credit; the final exam was a second chance at every quiz; and letter grades were based on the number of accumulated points.

Each change was based on something I’d read about. Binary scoring and sum-based thresholds are elements of specifications grading. The quiz replacement policy is a nod to standards-based assessment and is an example of reattempts without penalty.

A typical quiz had 6-10 problems worth 1 point each, and 2-3 problems worth 2 points each. For the 1-point problems, a student received the point if their answer was correct; a clear boundary separated “correct” and “not correct.” Here are a few examples:

True or False? If an undirected graph has n vertices and n-1 edges, where n is a positive integer, then the graph must be a tree.

Draw an undirected graph G containing exactly 4 vertices and 4 edges. The edges should be labeled with weights, and G should have exactly two MSTs. (The edge weights should not all be distinct.)

Give a “yes” instance of Vertex Cover. The graph should have at least 3 vertices and at least 2 edges.
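Because each 1-point answer is either correct or not, answers like these can even be checked mechanically. Below is a minimal brute-force sketch in Python. The function names are hypothetical, and brute force is reasonable only because quiz-sized graphs are tiny. It confirms that the True/False statement is false (the edge count alone does not guarantee connectivity), counts the MSTs of a candidate answer to the second problem, and checks a candidate “yes” instance of Vertex Cover for a cover size k.

```python
from itertools import combinations

def is_connected(n, edges):
    """Depth-first search from vertex 0 over an undirected edge list."""
    adj = {v: [] for v in range(n)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    seen, stack = {0}, [0]
    while stack:
        u = stack.pop()
        for w in adj[u]:
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == n

def is_tree(n, edges):
    # A tree needs n - 1 edges AND connectivity; the edge count alone is not enough.
    return len(edges) == n - 1 and is_connected(n, edges)

def count_msts(n, weighted_edges):
    """Enumerate every (n-1)-edge subset; connected ones are spanning trees."""
    best, count = None, 0
    for subset in combinations(weighted_edges, n - 1):
        if not is_connected(n, [(u, v) for u, v, _ in subset]):
            continue
        w = sum(weight for _, _, weight in subset)
        if best is None or w < best:
            best, count = w, 1
        elif w == best:
            count += 1
    return count

def is_yes_instance_of_vertex_cover(n, edges, k):
    """True if some set of k vertices touches every edge."""
    return any(
        all(u in cover or v in cover for u, v in edges)
        for cover in map(set, combinations(range(n), k))
    )

print(is_tree(4, [(0, 1), (1, 2), (2, 0)]))  # False: n - 1 = 3 edges, but vertex 3 is isolated
print(count_msts(4, [(0, 1, 1), (1, 2, 2), (2, 3, 3), (3, 0, 3)]))  # 2
print(is_yes_instance_of_vertex_cover(3, [(0, 1), (1, 2)], 1))  # True: {1} covers both edges
```

The 4-cycle with weights 1, 2, 3, 3 has exactly two MSTs because either of the two weight-3 edges can be dropped. This is also why the weights cannot all be distinct: distinct weights force a unique MST.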

The 2-point problems required a longer computation and/or more ingenuity. To receive the points, a student’s answer needed to demonstrate significant progress toward a completely correct solution. For example, if the answer consisted of a table containing 6 integers (as in Problem 1 below), then the student received credit only if all 6 values were correct. Some 2-point problems are below:

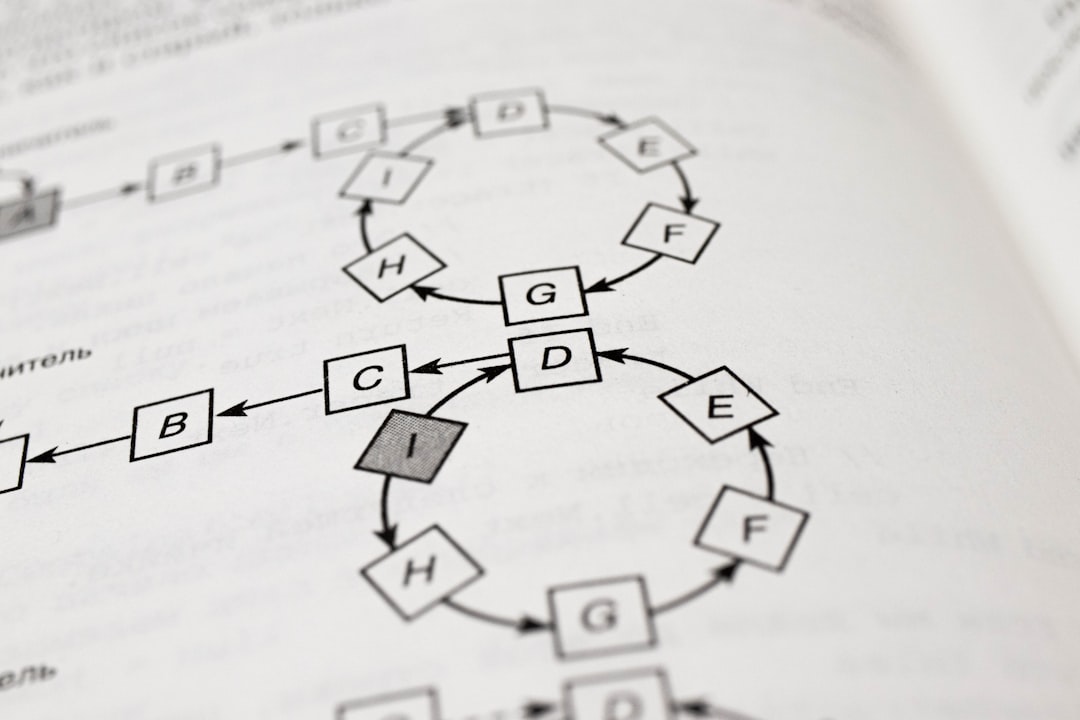

1. Suppose we run the BFS algorithm on [the graph above], starting at vertex 0, and we break ties in favor of the smallest number (e.g., if 2 and 5 are both options, pick 2 first). But suppose that we stop immediately before popping a third vertex from the queue. List the values of d in order at this point, from d[0] through d[5].

2. Draw an instance of the MST problem (including edge lengths) such that Kruskal’s and Prim’s algorithms select the same set of edges in the same order. You should specify the starting vertex for Prim’s, and your graph should have exactly 4 vertices. Briefly explain your answer.

3. Same as Problem 2, but replace “the same order” with “different orders”. Briefly explain your answer.
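The 2-point problems can be checked in the same spirit. The quiz’s graph exists only as an image, so the sketch below runs BFS on a hypothetical 6-vertex graph. It assumes the textbook BFS in which d[v] is set when v is first discovered, and unreached vertices keep d = ∞. The MST helpers record the order in which Kruskal’s and Prim’s algorithms add edges. All names are illustrative, and ties are broken by list order.

```python
import math
from collections import deque

def bfs_partial(adj, source, max_pops):
    """BFS that stops once max_pops vertices have been popped from the queue."""
    d = [math.inf] * len(adj)
    d[source] = 0
    queue = deque([source])
    pops = 0
    while queue and pops < max_pops:
        u = queue.popleft()
        pops += 1
        for v in sorted(adj[u]):  # tie-break in favor of the smallest vertex number
            if d[v] == math.inf:
                d[v] = d[u] + 1
                queue.append(v)
    return d

def kruskal_order(n, edges):
    """Edges (u, v, w) in the order Kruskal's algorithm accepts them (union-find)."""
    parent = list(range(n))
    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x
    order = []
    for u, v, w in sorted(edges, key=lambda e: e[2]):
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            order.append((u, v, w))
    return order

def prim_order(n, edges, start):
    """Edges in the order Prim's algorithm adds them; assumes a connected graph."""
    in_tree, order = {start}, []
    while len(in_tree) < n:
        # Cheapest edge with exactly one endpoint in the tree (O(n*m); fine for 4 vertices).
        u, v, w = min((e for e in edges if (e[0] in in_tree) != (e[1] in in_tree)),
                      key=lambda e: e[2])
        in_tree.add(v if u in in_tree else u)
        order.append((u, v, w))
    return order

# Hypothetical graph; "stop immediately before popping a third vertex" means max_pops=2.
adj = [[1, 2], [0, 3], [0, 4], [1, 5], [2, 5], [3, 4]]
print(bfs_partial(adj, 0, max_pops=2))  # [0, 1, 1, 2, inf, inf]

# A 4-vertex instance: Prim from 0 matches Kruskal's order, Prim from 3 reverses it.
E = [(0, 1, 1), (1, 2, 2), (2, 3, 3), (0, 3, 4)]
print(kruskal_order(4, E) == prim_order(4, E, 0))  # True
print(prim_order(4, E, 3))  # [(2, 3, 3), (1, 2, 2), (0, 1, 1)]
```

In this instance both algorithms select the same three edges every time, so a single graph can serve for the second and third problems by changing only Prim’s starting vertex.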

Reflection

The obvious benefit of a test-only policy was a reduction in grading and administrative tasks (most notably homework), which created more time for lecture preparation, quiz writing, and office hours. I also liked the simplicity of the system, which helped students quickly catch on to it. My impression is that because they were accustomed to in-class, timed tests, they accepted them as a standard method of assessment. They didn’t rave about the test-only policy (at least not in front of me), but I also didn’t receive complaints. In fact, they seemed less anxious about the final exam than students I’d seen in the past. I think the key reason was that they knew that the exam would resemble the quizzes and that it could only increase their grade (possibly by a lot) due to the sum-based thresholds and quiz replacement policy.

Relative to students in the past, students seemed to make fewer attempts at the open-ended problems on the assessments. My hypothesis is that binary scoring, together with the fact that every problem was worth at most 2 points, reduced their impulse to scribble something down in the hopes of receiving partial credit. This could be interpreted both positively (students had clearer intentions and goals) and negatively (they shied away from challenges).

The cost of a test-only policy was that students might have engaged with the course material less deeply, since in-class assessments can only assess so much. Assigning homework could have helped, but my past experience made me wary of it: homework that’s too hard demoralizes students and nudges them toward shortcuts, and homework that’s too easy just feels like busywork. I wasn’t alone; there’s quite a bit of anti-homework discourse these days (though most of the focus is on K-12). But after the semester started, I read some other posts that discuss cheating, which made me feel more optimistic about homework. I’ve also realized that there are other ways to assess learning, including portfolios, videos, group papers, and a class journal. Given the limitations of traditional tests, I’m interested in exploring these avenues in the future.

Binary scoring was faster and more consistent than traditional scoring, since there was no partial credit to weigh. In the past, for a problem worth 12 points, I’d ponder whether a student should receive, say, 9 or 10. Binary scoring removed this uncertainty: work that would once have earned 9/12 or 10/12 clears the bar for credit either way (though perhaps not the bar for excellence). For problems whose solutions were more open-ended (about 20-30% of all problems), the passing boundary was less clear; I graded these using more detailed rubrics (e.g., “at most one mistakenly labeled edge” rather than simply “correct”).

Binary scoring and sum-based thresholds are major components of specifications grading, which I find compelling. They make especially good sense when the syllabus lays out the course’s learning objectives, since passing a threshold then represents meeting an objective. My syllabus had high-level objectives (e.g., “Analyze the correctness and runtime complexity of a given algorithm”) but no detailed, low-level ones. Unfortunately, I think this caused students to lose track of the learning objectives, which made the course appear less structured. To rectify this, I could link problems to objectives more explicitly with a system like Joshua Bowman’s (more on that at the end). At the same time, I’m aware of the risk that students become overly focused on satisfying learning objectives, which do not always capture the big picture.

Finally, even though I didn’t grade attendance, it was fairly consistent. Out of the 27 students, I’d guess that at least 80% showed up to at least 80% of the lectures.

Takeaways

I expect to teach classes larger than the ones I taught at Elon, so moving forward, I’m interested in ideas that scale. With that in mind, the change I’d most like to keep is binary scoring (though I might use a 3- or 4-point scale for the more open-ended problems). Reducing time spent on determining objective-looking numbers enables faster and more detailed feedback, and having fewer possible outcomes yields greater consistency, especially if there are multiple graders with different inclinations.

Another idea that scales well is baking grade replacements and/or retakes into the syllabus. Reattempts are essential for growth, but there is a risk that having automatic replacements causes some students to blow off the course until the last week, sabotaging both their learning and their grade. (The same could be said about homework and attendance.) But, perhaps surprisingly, this wasn’t a problem in Algorithm Analysis — it seems that students in upper-level courses generally have more consistent studying habits, and there’s a selection effect where students further along in the CS major are generally interested in the field.

Finally, I’m considering explicitly labeling every problem with both its level in Bloom’s taxonomy and its corresponding learning objective. As mentioned earlier, one example of this is Joshua Bowman’s calculus class: tasks are divided into three categories called “Core,” “Auxiliary,” and “Modeling,” and each task has a description (e.g., one Auxiliary task is to “Estimate a derivative by approximation using a table or chart”). By referring to their list of completed tasks (instead of a vague percentage like “77%”), students can precisely track their progress and understanding to guide their improvement.
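As a rough illustration of such labeling, the Python sketch below tags each problem with its point value, a Bloom’s-taxonomy level, and a learning objective, then reports points earned per objective instead of a single percentage. The class, field names, problem IDs, and objective labels are all hypothetical; this is not Bowman’s actual system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Problem:
    name: str          # hypothetical problem ID
    points: int        # 1 for lower Bloom levels, 2 for higher ones (as in the course)
    bloom_level: str   # e.g. "understand", "apply", "create"
    objective: str     # hypothetical learning-objective label

def progress_by_objective(problems, earned):
    """Map each objective to (points earned, points available) under binary scoring."""
    report = {}
    for p in problems:
        got, available = report.get(p.objective, (0, 0))
        got += p.points if p.name in earned else 0  # all-or-nothing credit
        report[p.objective] = (got, available + p.points)
    return report

problems = [
    Problem("Q3-1", 1, "understand", "trace graph algorithms"),
    Problem("Q3-2", 2, "apply", "trace graph algorithms"),
    Problem("Q3-3", 2, "create", "construct MST instances"),
]
print(progress_by_objective(problems, earned={"Q3-1", "Q3-3"}))
# {'trace graph algorithms': (1, 3), 'construct MST instances': (2, 2)}
```

A per-objective report like this tells a student *where* the missing points are, which a single total cannot.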

My first foray into alternative grading was good but not perfect. It gave me firsthand experience with many of the concepts involved, including the role of learning objectives, methods of assessment, retakes, and points. Going forward, I hope to keep growing as an educator and to promote learning and growth among my students.

Unfortunately, I don’t have much data or student feedback against which to check these observations; I plan to collect more from future classes.
