Alternatively graded discrete math in hyperdrive: A reflection

A postmortem on specifications grading in a fast and wild asynchronous online course

Take a standard, three-credit course that normally meets 150 minutes per week during a 15-week semester. Then, run it on a six-week schedule so that the course is 2.5 times faster. And put it online so there is no physical colocation. And make it asynchronous, so there is no temporal colocation either. And, while you're at it, design the assessment and grading around specifications grading. And do all this in such a way that the exponentially growing risk of the misuse of generative AI is mitigated.

What kind of person in their right mind would agree to teach a class like this? Well, me. (Which I'm aware puts "right mind" up for debate.) This class was my reality during our "Spring" term, which ran from May through mid-June. I have mentioned this class a couple of times (first here, and then at length here). It's my Discrete Structures 1 class that I teach regularly, but with the above modifications for Spring term. I said in the last post that once the term was over, I'd be back to give a postmortem. So, here it is.

The setup

I’m first going to quickly get into some of the weeds of the design of this course to give some context and terminology. This is a little boring, but bear with me.

This course is the first in a two-semester sequence on the fundamental mathematical tools and concepts needed for Computer Science and CS-adjacent fields of study (like Cybersecurity). Here is the catalog description. I teach this course almost every semester, but never in the summer. This version of the course, again, was taught asynchronously online during our six-week "Spring" term (May-June). After some initial concern that the course wouldn't meet minimum enrollment levels, I ended up with nine students, mostly Computer Science or Cybersecurity majors at the end of their first or second year, plus one staff member auditing the class. Here is my syllabus for the version of the class I'm writing about today.

Although I teach this class often, this version of it was so different that it almost felt like a different course. In situations like that, I like to start the design process with what’s familiar to me, and I typically think of a class as designed along three axes: basic skills, applications of basics, and engagement. No matter what the course eventually looks like, all student activity (as well as assessment and grades) is a linear combination of those three orthogonal components.

To provide structure for the course (always super important but especially so in an asynchronous online course), I split the course into six weekly modules: Computer Arithmetic, Logic, Recursion and Induction, Sets and Functions, Combinatorics Part 1, and Combinatorics Part 2. The activities in the course were aligned with clearly defined standards in the form of 15 Learning Targets, which you can see here and which describe the essential basic skills students learn.[1]

Each weekly module had the same set of assignments:

Two Concept Checks, which were short quizzes on basic ideas, administered and graded through our LMS (Blackboard).

Two practice sets, divided into Level 1 (basic) and Level 2 (less basic). Here are the two sets from Week 3. Level 1 practice consisted of 10 problems, which were randomly assigned to students; each student posted their work on a FigJam board for others to see.

Two video reflections, one at the beginning and one at the end of each week. (Students were given a short prompt and left to their own devices to record a video responding to it and upload it to the LMS.)

A Problem Set with more advanced problems to tackle. Here is the problem set from Week 3.

Checkpoints for Learning Targets, which are quizzes testing mastery of the 15 Learning Targets.[2]

The Concept Checks, video reflections, and Level 1 practice (that’s five things each week) are how I assessed the “engagement” axis. These were graded Complete or Incomplete on the basis of completeness and effort only. Because of that, these items were not eligible for retries, but they did serve as useful starting points for feedback.

Level 2 practice and Problem Sets were how I assessed the “applications of the basics” axis. These were graded Success or Retry on the basis of completeness, effort, and overall correctness. Here is a document that outlines the standards for “Success”-ful work. I allowed one retry total for each Level 2 practice set, and up to two retries per week for Problem Sets.

The Learning Targets cover all the basic skills, and these were assessed through weekly Checkpoints. These were similar to the Checkpoints in the in-person version of the class, except that, because the course was asynchronous and online, I couldn't do in-class timed testing. Instead, I set up individual Blackboard quizzes for each Learning Target, with each week bringing new versions of the Checkpoints for earlier Learning Targets plus new Checkpoints for that week's Learning Targets. These quizzes were mostly "essay" questions so students could show their work, which was the main basis for grading. They were graded Success or Retry, and if a student got a Retry, they could try again the next week.

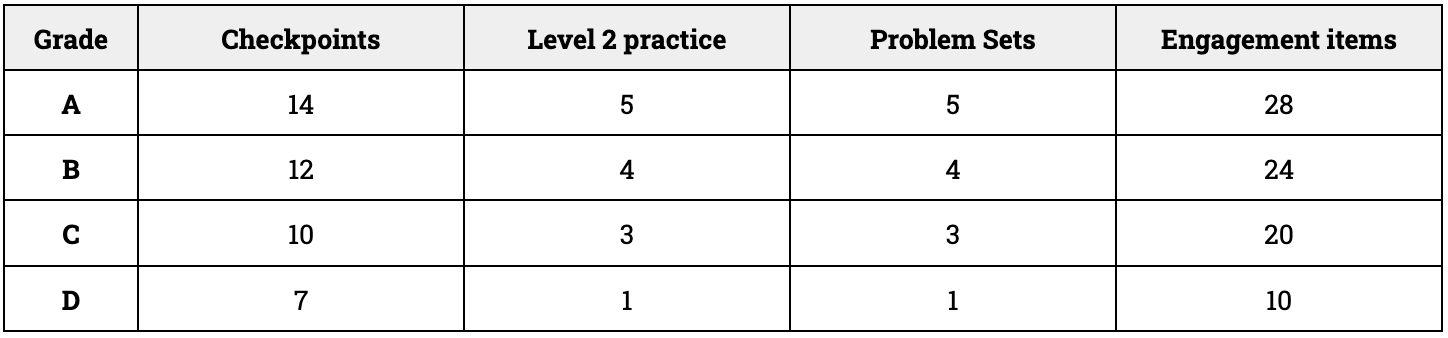

Course grades were tabulated according to the number of Success or Complete marks earned in each of the main assignments:

There are rules in the syllabus for earning plus and minus grades as well, which you can read about if you like.

What students did, and what I did

With the assessment and grading structure explained, here is what students did and when they did it. Each week had the same schedule:

Sunday: The content folder for the week opens. Each folder contains a “home page” with a list of articles from the course wiki to read and videos to watch.

Monday: Students finish the week’s videos and readings. Then, they complete the first Concept Check and the first video reflection.

Tuesday: Students complete their assigned Level 1 practice exercise and put work on the FigJam board.

Wednesday: Nothing is due on Wednesday. This day is for getting started on the more advanced assignments due later in the week, watching my worked example walkthrough videos (below), and asking questions.

Thursday: Students turn in Level 2 practice and complete the second Concept Check.

Friday: Students complete the second video reflection.

Saturday: Students turn in the Problem Set for the week.

We also had a one-hour online drop-in session on Zoom each week, at noon on Thursdays. Students could also schedule 1:1 appointments with me.

Behind the scenes, I had a lot of work on my hands. I blocked off 9:00am to 12:00pm Monday through Friday to work on the course and usually used every moment of that time block for the entire six weeks. Like students, I also had a weekly schedule:

Monday: "Grading Day" -- Problem Sets, Checkpoints, revisions, and other stuff turned in over the weekend.

Tuesday: Each week I made a 10-minute video going over the questions in the first Concept Check. Here's the video for week 3. I'd also go through the FigJam board and look through student work on the Level 1 practice and leave feedback on the board if needed.

Wednesday: Each week I made a significantly longer video with 4-6 sample problems and a complete walkthrough of their solutions. These videos often ran for 35 minutes to an hour and would take about twice that much time to create. Here's the walkthrough video for week 3.

Thursday: Catching up on grading items submitted earlier in the week, especially Checkpoints, and prepping materials for the next week. Also the drop-in hour. I also made a walkthrough video for the second Concept Check and set that up to post on Friday morning.

Friday: Each Friday I would make personalized feedback videos for each student in the class. These ran anywhere from 3 to 5 minutes (longer, if there was more to say). I used Loom to make these. I'd typically pull up the student's Blackboard gradebook page, give an off-the-cuff overview of how their work went this week, and give advice, encouragement, admonition, or whatever was needed.

That’s a fairly complete look at how the course was set up and what went on during the week. Now to get to the heart of it: How did this all work?

What worked well

The "high frequency/low intensity" approach to the assignment schedule. I was worried about the busy-ness of the weekly schedule at first, but I stuck with it for two reasons. First, it makes sense given the accelerated schedule: If this were a 15-week course, we'd be doing one Concept Check per week, one Problem Set every two weeks, and so on, which is a normal and appropriate workload. Second, in my past experience with online courses, downtime is dangerous: Putting more time between assignments, even with good intentions (to give students more breathing room), does not typically result in better work, just more procrastination. I wanted students on their toes, turning in something almost every day, even if it was just a completeness-and-effort item.
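The scaling argument is just arithmetic, so here is a minimal Python sketch of it. It assumes only the per-week counts from the schedule above (two Concept Checks and one Problem Set per week for six weeks) and spreads those totals over a hypothetical 15-week semester; the function name `semester_equivalent` is an illustrative label, not anything used in the course.

```python
# Back-of-the-envelope check of the scaling argument: spread the six-week
# assignment load over a 15-week semester and see what cadence results.
SHORT_TERM_WEEKS = 6
SEMESTER_WEEKS = 15


def semester_equivalent(per_week: int,
                        short_weeks: int = SHORT_TERM_WEEKS,
                        long_weeks: int = SEMESTER_WEEKS) -> tuple[int, float, float]:
    """Return (total items, items per week, weeks between items) over the longer term."""
    total = per_week * short_weeks
    return total, total / long_weeks, long_weeks / total


# Weekly counts taken from the six-week schedule described in this post.
weekly_counts = {"Concept Checks": 2, "Problem Sets": 1}

for name, per_week in weekly_counts.items():
    total, rate, gap = semester_equivalent(per_week)
    print(f"{name}: {total} total -> {rate:.1f} per week, one every {gap:.2f} weeks")
```

Running it prints 12 Concept Checks at 0.8 per week and 6 Problem Sets at one every 2.50 weeks, which is roughly the one-per-week and one-every-two-weeks cadence of a normal semester.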

Students reported in their video reflections that although the pacing of the course was brisk (to put it politely), they liked it this way, because it made it almost impossible to procrastinate or disengage. This is the same reaction that students in a 6-week synchronous online version of this course gave. I think a lot of students benefit more from a pacy but structured and supported schedule than they do from lots of leniency (for example, movable deadlines).

The use of video. You might notice that we made a lot of videos in the class: two video reflections per week from each student to me, and four videos a week from me to the students. Despite all the constraints on the class, it did have one major advantage compared to my usual sections: The small size. With only 10 students, I could leverage that advantage through lots of video back-and-forth. Making video isn't easy, but keeping it simple and informal humanized the course greatly. I have never had an easier time learning my students' names and faces. I have to believe that this level of personal interaction helped create a better community in the class, and thereby mitigated the risk of academic dishonesty, if only a little.

And as always, reattempts without penalty really helped students, despite the greatly shortened window in which reattempts were possible. At first I wondered whether six weeks was adequate, but as noted above, when students know there are only six weeks to work with, they tend to use that time effectively precisely because it is scarce. There is such a thing as giving too much time for reassessment.

What didn't work as well

Respondus Lockdown Browser. As mentioned, the Checkpoints on Learning Targets were done on the LMS as online quizzes. To guard against cheating, I used Respondus Lockdown Browser on these. As the name suggests, Respondus is a web browser that, when opened, forces a shutdown of every program on your computer that can access the internet, even background processes. It also activates the user’s webcam, records them while they take the quiz, and flags activity that looks suspicious. I swore I would never use this tool, because I don't like creepy surveillance-based ed tech. But one of our affiliate faculty, who frequently teaches asynchronous courses, recommended that I give it a try. And it was not all bad; in fact, it was surprisingly functional for the most part, and not too Orwellian.

But in addition to having a clunky and unintuitive integration with our LMS, the tool repeatedly produced a fatal glitch: Upon opening a Checkpoint, students would be told that they didn't have permission to view the assignment because I (the instructor) had not yet configured Respondus Lockdown Browser (although I had), and the only thing the student could do was exit the assessment. This didn’t happen on all Checkpoints. In fact, I could never reliably reproduce the issue; it seemed to pop up at random. The usual moves (clear your cache, quit and restart the browser, etc.) often fixed it, but not always. It posed an academic integrity threat of its own, because a student could wait until 11:45pm before the 11:59pm deadline, claim to have opened the Checkpoint but been unable to take it because of the glitch, and then ask for an extension, which I could not reasonably deny. I never got this glitch resolved, and it was a huge hassle.

Some assignments are still too exposed to AI risk. This journey to re-envision my Discrete Structures course started with my experiences of heavy unauthorized AI use back in the fall. In an asynchronous online format, none of the measures I had taken to mitigate that risk (i.e., moving everything to in-class timed testing) would apply. In the end, grading the five weekly "engagement" items on completeness and effort only helped, as did using Respondus Lockdown Browser for Checkpoints (with the caveats above). Using frequent video to foster a sense of belonging was also helpful, I think.

But Level 2 practice and Problem Sets, two of the main tributaries to the course grade, were done more or less as always, with students working out solutions, then typing them up and submitting them. So these were just as exposed to the risk of unauthorized AI use as ever, if not more so. I never investigated a student for suspected cheating on these, but my "spider sense" was going off a lot. I could have mitigated this the way I did in the Winter: By grading the practice and problem sets on completeness and effort, then giving periodic exams drawn from them. But in the asynchronous environment, a student could simply have AI-generated solutions on paper in front of them during an exam. And in the 6-week format there just wasn’t enough time in the calendar for two more exams plus multiple retakes of them.

Student schedules. Because this was a 3-credit class crammed into 6 weeks, I estimated that it required between 18 and 22 hours per week, somewhere between 3 and 5 hours a day.[3] I stand by that estimate, but in reality, many students had nowhere near that much time to give. Some were taking three (?!) of these courses simultaneously, or working 40+ hours per week at jobs, or both! By week 3, some of my students were visibly worn out and falling behind. This is also when my “spider sense” about AI cheating started to tingle the most.

What I think I think about courses done this way

We in higher ed are not being fully honest with students about the demands of asynchronous online courses, especially those on short time frames. Asynchronous online courses have been around for a while. They have been advertised as perfect for “busy working adults”, because they can be done in between all of life’s other responsibilities, regardless of your schedule. But this just isn’t so, unless you make next to no intellectual demands on students. A course that challenges students to do significant learning, especially a lower-level course like mine, is going to demand lots of time and energy, consistently given. However, I am not certain that my students who signed up for my class plus two others, on top of a 40+ hour-per-week job, had anybody around them telling them this and urging them either to limit their course load or to dial back their work hours.

There are at least two explanations for this. One says that students oversubscribe themselves with online courses because so many asynchronous online courses are trivially easy and not actually that demanding on one’s schedule. I can believe this, because I’ve experienced it firsthand: online courses where the only activities are watching videos and then taking multiple-choice quizzes, with little to no interaction with a human. The other says that this happens because advisors and others in higher education are loath to do anything that would put a dent in the institution’s revenue stream, for example by telling students to take just one course and not three. I don’t think advisors intentionally encourage students to take more courses than they can possibly handle in order to make money, but I can believe that they might be pressured into it from higher up the org chart.

Either way, we need to be honest with students up front: that we mean business about the level of academic challenge in our courses, regardless of modality; that 6-week asynchronous online courses are massive time commitments; and that there are only 168 hours in a week, no matter how much we might wish otherwise.

Asynchronous courses, if run at all, should be smaller. I mentioned that a major advantage that my Spring class had versus other versions of the class was the small size. With just nine regular students, I could do a lot of things that are unthinkable even for a relatively small 25-30 student class, like personalized feedback videos. Many of these things strongly combat the increased risk of cheating, both with and without AI. For example, with nine students I could easily have done oral exams instead of Checkpoints, or set up an ungrading structure in which all assignments received feedback only and each student got a 90-minute comprehensive oral exam at the end.

At my institution, an asynchronous course must enroll at least 10 students for the administration to allow it to run, and enrollment is capped at 20. I think this is about two times too large on both ends: The minimum enrollment should be 5 and the cap should be 12. Then you can get creative with assessment and grading in ways that mitigate the downsides of this format.

Ungrading might be the best way to run asynchronous courses. Expanding on something I mentioned above: I could have given all the assignments feedback only, with no marks, and then scheduled a 60-90 minute comprehensive oral exam with each student at the end, part of which would involve collaboratively determining the student’s course grade. This is essentially ungrading, and with just nine students it was definitely doable. It would be the ultimate firewall against unauthorized AI use and other forms of academic dishonesty: Go ahead and do what you will during the semester, but be prepared to stand and deliver in the end. I remain as skeptical as ever about ungrading,[4] but I am starting to think it’s an excellent fit for courses like this one.

Would I do this again?

Well, probably not, because, generally speaking, I don’t really want to teach in the summer again. I didn’t want to this time, either: If I’m being totally honest, I picked up the class to help pay for my daughter’s college tuition, not because I had a burning desire to teach for six more weeks.

But if I were ever in a situation where teaching in the summer made sense, I’d do a class like this again, provided that the class size was kept small enough — again, in the 5-10 student range — to allow for frequent personal interaction via video without crushing me with work. The video back-and-forth in my class was the special sauce that got the feedback loops moving and made the whole thing work, and I would want to double down on that, perhaps going as far as the ungrading solution I described.

I want to end by noting that for all of the peculiarities and challenges of this course’s structure, the specifications grading setup I used worked fine with few modifications from the face-to-face version. The difficulties and hiccups I did encounter stemmed from students having maxed-out schedules, weird behaviors of our LMS with quizzes, and so on, not from having a non-standard grading system. I did have to make adjustments, for example giving sufficient time for reassessments and using video to kickstart feedback loops. But alt-grading remains one of the few “universal solutions” in higher education: a single approach that solves a systemic problem across many contexts, and that’s pretty cool.

[1] In a 15-week semester, with 15 Learning Targets, I can organize the class around addressing one Learning Target per week on average. Not so here. And obviously the 12-Week Plan was a nonstarter.

[2] Unfortunately, I can't link to the Checkpoints, Concept Checks, or video reflection assignments, since those live exclusively on Blackboard, and Blackboard (one of the worst-designed pieces of software ever) does not provide external linking.

[3] The number 2.5 is the scaling factor to keep in mind for converting between 6 weeks and 15 weeks. A three-credit course on a 15-week schedule meets 150 minutes = 2.5 hours per week, and we typically estimate 2 hours outside of class for each hour spent in class. That comes to 5 hours per week outside class, for a total of 7.5 hours per week for the “slow” version, which times 2.5 is 18.75 hours per week for the “fast” version. And some would say the ratio of time spent outside class to time spent in class should be closer to 3:1 than 2:1.
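The arithmetic in this footnote can be checked with a short Python sketch. The 150-minute meeting time, the 2:1 and 3:1 outside-to-in-class ratios, and the compression from 15 weeks to 6 come from the text above; the function name `weekly_hours` is a hypothetical label for illustration.

```python
# Weekly workload estimate for a three-credit course, using the rule of
# thumb from the footnote: a fixed number of outside hours per in-class hour.
SEMESTER_WEEKS = 15
SHORT_TERM_WEEKS = 6
IN_CLASS_HOURS_PER_WEEK = 150 / 60  # 150 minutes per week = 2.5 hours


def weekly_hours(outside_ratio: float, term_weeks: int = SEMESTER_WEEKS) -> float:
    """Hours per week (in class + outside) when the 15-week course runs in `term_weeks` weeks."""
    hours_per_week_15 = IN_CLASS_HOURS_PER_WEEK * (1 + outside_ratio)
    # Same total hours squeezed into fewer weeks: scale by 15 / term_weeks (2.5 for 6 weeks).
    return hours_per_week_15 * SEMESTER_WEEKS / term_weeks


for ratio in (2, 3):
    slow = weekly_hours(ratio)
    fast = weekly_hours(ratio, SHORT_TERM_WEEKS)
    print(f"{ratio}:1 ratio -> {slow:.2f} h/week over 15 weeks, {fast:.2f} h/week over 6 weeks")
```

At 2:1 this reproduces the 7.5 and 18.75 hours per week above; at 3:1 the six-week version climbs to 25 hours per week.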

[4] My skepticism is summed up here. Briefly, ungrading is only as good as students’ abilities to self-assess. And when you have a bunch of students who are not well-versed in critically examining their own work (as is typically the case in this class, which is a first-year-level course), it’s unrealistic to expect consistently good information from student self-assessment. In the real world, the best way to help those students is to give clear markers of which parts of their work are meeting the standards and which parts aren’t; in other words, to grade their work.
