Zooming in on all-or-nothing assessments

Some closely related forms of assessment and how they work

Last week, Robert proposed some “common ground” that underlies many of the assessment approaches we discuss on this blog. This week, I’m going to try to cut a clearer path through the jungle of alternative assessment methods.

In this post, I’ll look at three common approaches to alternative assessments: standards-based grading (SBG), specifications grading (Specs), and mastery-based testing (MBT). These are often discussed together, and the lines between them can get a bit blurry.

The common feature that links these three approaches is what I’ll call “all-or-nothing grading.” This relates to the third pillar that Robert described: “Student work doesn’t have to receive a mark, but if it does, the mark is a progress indicator and not an arbitrary number.” Each system described below focuses on essentially two marks: “Satisfactory” vs. “Not yet satisfactory”. Students can only earn credit by doing work that achieves the Satisfactory level.[1]

One way to think about this comes from a delightful paper that argues in favor of one approach (MBT).[2] Using the analogy of learning as exploring a landscape, the authors write:

Using assessments based on accruing points treats the landscape as though it were flat and tasks students with traversing 70% (or 80% or 90%) of the terrain. Such guidance often provides a perverse incentive to approach a new mountain, march around the gentlest parts of the base, and leave it behind without experiencing its important and beautiful heights.

The goal of an all-or-nothing approach is to encourage students to dig deeply into each topic. High (but appropriate) expectations, combined with helpful and actionable feedback, engage the feedback loop and give students an incentive to return to previous topics until they reach a high level of proficiency. In turn, this ensures that a student’s grade reflects consistently high understanding rather than diffuse and incomplete knowledge.

As Robert wrote last week, there’s a temptation to define some of these grading systems and draw clear lines between them. For many of the same reasons that Robert described, I want to avoid that here. Drawing lines leads to too much focus on whether you’re on one side of the line or the other — “am I using SBG right? Or is this more like Specs?” — rather than thinking about why you’re doing it.

It’s more useful to think about what resonates with you (or doesn’t) in each of these approaches. They were each inspired by a different kind of course situation and show their strengths in different settings. We’ll take a look at how the core feedback loop works in each one, and what kind of classes or settings they are ideally suited for.

Standards-Based Grading (or Assessment)

Let’s start with one of the grandparents of alternative assessment methods, and one that is quite popular in K-12 education. Here’s a short description:

In Standards-Based Grading, all assessments are aligned with one or more fine-grained standards. Student progress is recorded for each standard separately, using a minimal rubric (e.g. “Meets standards” or “Not yet”). Final grades are based on how many (or which) standards a student has met.
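To make the bookkeeping concrete, here is a minimal Python sketch of an SBG gradebook that records each standard separately and maps the number of standards met to a letter grade. The cutoff table (27 standards for an A, and so on) and the standard names are hypothetical assumptions; as the description notes, a real course might instead require specific standards for each grade.

```python
# A minimal sketch of SBG record-keeping. The standard names and the
# grade cutoffs below are hypothetical, chosen only for illustration.
GRADE_CUTOFFS = [(27, "A"), (24, "B"), (20, "C"), (16, "D")]  # (min standards met, grade)


def sbg_final_grade(progress: dict[str, bool]) -> str:
    """Map per-standard marks (True = "Meets standards") to a letter grade."""
    met = sum(progress.values())  # each standard counts separately; no averaging
    for minimum, letter in GRADE_CUTOFFS:
        if met >= minimum:
            return letter
    return "F"


# 30 hypothetical standards; this student has met the first 25.
progress = {f"S{i}": i <= 25 for i in range(1, 31)}
print(sbg_final_grade(progress))  # B
```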

In SBG, standards tend to be fine-grained, at the level of individual skills or techniques that a student could use. SBG classes usually have 20 to 30 standards, although more is possible (and harder to manage). Each assessment usually assesses students on multiple standards.

Assessments don’t earn one overall grade. Instead, student work is assessed for an appropriate level of proficiency on each standard separately. This means that the same work might meet some standards but not others, and that’s a key feature of SBG.

For example, a quiz in a Calculus class might ask students to calculate several derivatives of varying difficulty, using whatever methods are appropriate. A student could complete straightforward derivatives correctly, but struggle with more advanced calculations (like the product or quotient rules). In an SBG system, this student could earn credit for the standard “I can calculate elementary derivatives”, while earning “Not yet” on the standard “I can calculate derivatives using the product and quotient rules.”

This leads to the core feedback loop: The fine-grained standards of SBG can help tailor and focus feedback on the specific areas that a student needs to work on. Then, students can act on that knowledge by seeking out an assessment that focuses on the standards that they still need to complete. There are three main ways that SBG classes can make this happen:

New assessments: Future assessments cover some of the same standards as earlier ones. If a student isn’t satisfied with their progress on a standard (or needs to meet the standard additional times), they can attempt a later assessment that covers that same standard.

Revisions: Students can revise their work on an unsuccessful attempt and resubmit it, usually including a reflective cover sheet about what they did to improve their understanding.

Reattempts: Students can request a new problem that focuses on a specific standard, usually during office hours or a scheduled reassessment period.

There are many ways of recording multiple attempts. The most common is to keep only a student’s most recent attempt on a standard, completely erasing the marks from previous attempts. This has the virtue of showing a student’s most up-to-date level of understanding. Another common option is to keep only the highest level of progress that a student demonstrates.
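The two record-keeping policies can give different answers for the same history of attempts. This small Python sketch assumes each attempt is simply marked True ("Meets standards") or False ("Not yet"); the function names are illustrative, not taken from any gradebook software.

```python
# Two hypothetical policies for combining repeated attempts on one standard.
# Each attempt is True ("Meets standards") or False ("Not yet"), oldest first.


def most_recent(attempts: list[bool]) -> bool:
    """Keep only the latest attempt; earlier marks are erased."""
    return attempts[-1] if attempts else False


def highest(attempts: list[bool]) -> bool:
    """Keep the best level ever shown; with two marks, this is 'met at least once'."""
    return any(attempts)


history = [False, True, False]  # met on the second try, missed on the third
print(most_recent(history))  # False
print(highest(history))      # True
```

The "most recent" policy reports current understanding but can take away credit; the "highest" policy never does.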

Many instructors require students to meet a standard more than once to show their continued understanding over time, or in multiple contexts. For example, you could require two demonstrations, one on an in-class quiz, and one on a more in-depth out-of-class assignment. This is another way of continuing the feedback loop: Even when a student has met a standard at a satisfactory level, there’s probably still helpful feedback an instructor could give. The student has a chance to act on that feedback on a subsequent assessment.
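Here is one way the two-demonstration requirement could be checked, as a Python sketch. It assumes each attempt is tagged with a context label ("quiz" or "assignment"); those labels, the `Attempt` record, and the "product rule" standard are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    standard: str
    context: str        # hypothetical labels: "quiz" or "assignment"
    satisfactory: bool


# Assumed policy: one satisfactory demonstration in each context.
REQUIRED_CONTEXTS = {"quiz", "assignment"}


def standard_complete(attempts: list[Attempt], standard: str) -> bool:
    """True once the standard has been met in every required context."""
    met_in = {a.context for a in attempts if a.standard == standard and a.satisfactory}
    return REQUIRED_CONTEXTS <= met_in


log = [
    Attempt("product rule", "quiz", True),
    Attempt("product rule", "assignment", False),
]
print(standard_complete(log, "product rule"))  # False: no satisfactory assignment yet
log.append(Attempt("product rule", "assignment", True))
print(standard_complete(log, "product rule"))  # True
```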

What situations is Standards-Based Grading best suited for? SBG arose from a desire to recognize and report multiple dimensions of student understanding that can be developed independently of each other. This is often true in entry-level classes with many different topics, such as introductory algebra, biology, physics, or general chemistry. SBG shines when you want to recognize student progress in some parts of their work while encouraging them to review other parts. SBG works with almost any type of assessment, including quizzes, exams, traditional homework, lab reports, and projects.

Specifications grading

Next we’ll look at Specifications grading:

In specifications grading, each assessment has a clear list of specifications that describe what constitutes satisfactory work on that assessment. Each submission earns a single overall mark based on whether it meets the assignment’s specifications consistently (“Satisfactory” or “Not yet”). Final grades are based on students satisfactorily completing related bundles of assessments.

Specifications grading comes from Linda Nilson’s book “Specifications Grading: Restoring Rigor, Motivating Students, and Saving Faculty Time”.

The “standards” in Specifications grading can look quite different from SBG. Each assignment includes a comprehensive description of what a successful submission must include. These specifications can be as broad or specific as you’d like. For example, they might cover specific content requirements, methods to use, topics to address, learning objectives, writing style, length, or anything else that matters.

The key feature of Specifications grading is the holistic focus on an entire assignment. An instructor’s key decision is, “Does this entire submission satisfactorily meet the specifications, or not?” In contrast to SBG, this encourages students to create a well-rounded submission that addresses all aspects of the assignment.
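As a deliberately simplified Python sketch, the holistic decision can be modeled as a single mark that requires every specification to be met. In practice the instructor judges whether the work meets the specifications consistently, so a checklist of booleans is an assumption; the specification names here are hypothetical. The point is that one missed specification makes the whole submission "Not yet", rather than earning partial credit.

```python
def specs_mark(checklist: dict[str, bool]) -> str:
    """One overall mark for the whole submission: every spec must be met."""
    return "Satisfactory" if all(checklist.values()) else "Not yet"


# Hypothetical specifications for one essay.
essay = {
    "clearly described example": True,
    "illustrations support the writing": True,
    "definition connected to the question": False,
}
print(specs_mark(essay))  # Not yet
```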

As a result, the core feedback loop in Specifications grading is grounded in revision rather than attempting new work. Nilson recommends a token system: Students have (or can earn) a limited number of tokens, which can be spent to permit revision of an assignment, usually including a reflection on what changed and what the student learned throughout the process. The instructor reviews the revised submission and decides if it meets the specifications. The mark on a revised submission completely overwrites any earlier mark. Tokens can serve other purposes as well, such as delaying deadlines.
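The token mechanics can be sketched in a few lines of Python. The class name, the starting balance of three tokens, and the rule that a revision must include a non-empty reflection are assumptions for illustration; Nilson’s book describes many variations.

```python
class SpecsGradebook:
    """Hypothetical sketch of a token system for one student's submissions."""

    def __init__(self, tokens: int = 3):  # starting token count is an assumption
        self.tokens = tokens
        self.marks: dict[str, str] = {}

    def record(self, assignment: str, meets_specs: bool) -> None:
        self.marks[assignment] = "Satisfactory" if meets_specs else "Not yet"

    def revise(self, assignment: str, reflection: str, meets_specs: bool) -> None:
        """Spend one token to resubmit; the new mark overwrites the old one."""
        if self.tokens < 1:
            raise ValueError("no tokens left")
        if not reflection.strip():
            raise ValueError("a revision must include a reflection")
        self.tokens -= 1
        self.record(assignment, meets_specs)


book = SpecsGradebook()
book.record("Essay 1", meets_specs=False)
book.revise("Essay 1", "Connected my definition to the question.", meets_specs=True)
print(book.marks["Essay 1"], book.tokens)  # Satisfactory 2
```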

This revision-based feedback loop can cover a broad range of aspects. Instructors can give feedback on an entire submission and its overall strengths and weaknesses, and ask students to address these in a full revision. But in the same way, the nature of specifications lets you get into nitty-gritty details if you need to. For example, “Your example is clearly described and your illustrations support your writing well. However, you haven’t clearly connected your proposed definition to your answer to the question: Why should ravioli be considered a sandwich?”

What situations is Specifications Grading best suited for? Specifications are great at describing processes, activities, and forms of communication. Because of its focus on an assignment as a single unit, this system insists that students create a cohesive whole that shows their ability to put together multiple ideas. Common types of assignments in Specifications grading are essays, proofs, projects, and portfolios.

Mastery-Based Testing

Our last alternative assessment approach in this post is Mastery-based testing:

Mastery-Based Testing focuses only on exams or quizzes. Classes include a list of broad objectives, usually at the level of textbook sections. Each exam or quiz consists of one page per objective covered so far (where a page may contain one or more related problems), and each exam or quiz includes new attempts at previous objectives. Students earn progress on each objective separately. A student’s exam grade is calculated as the percentage of objectives they have met.
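The exam-grade arithmetic is simple enough to show directly. This Python sketch assumes 16 hypothetical objectives named O1 through O16; a student who has met 4 of them has an exam grade of 4/16 = 25%.

```python
def mbt_exam_score(met: set[str], objectives: list[str]) -> float:
    """Exam grade = percentage of the course's objectives a student has met."""
    if not objectives:
        raise ValueError("the course needs at least one objective")
    return 100 * len(met & set(objectives)) / len(objectives)


objectives = [f"O{i}" for i in range(1, 17)]  # 16 hypothetical objectives
print(mbt_exam_score({"O1", "O2", "O3", "O5"}, objectives))  # 25.0
```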

MBT’s objectives are somewhere between SBG’s fine-grained standards and Specifications grading’s assignment-wide specifications. The objectives here cover broad topics, usually described as “types of problems that a student should be able to complete successfully in this class.” These might correspond to sections in a textbook, such as “Convert between names and formulas of molecular compounds” or “Solve global optimization problems.” MBT classes usually have fewer objectives than SBG, around 10 to 20 at most.

Because each page on an MBT exam covers a single objective (possibly through multiple problems), the entire objective earns a single mark for the overall level of work. This leads to a more holistic sort of assessment, similar to Specifications grading.

A key feature of MBT is that students have built-in and highly structured opportunities to reattempt objectives. The feedback loop here is based on the regular timing of quizzes or exams. Students have time between assessments to review a topic, and then demonstrate their understanding on a new problem when the next assessment happens. An example: The first exam might cover four objectives — one page of problems per objective. The second exam would then cover four new objectives, but also contain four pages of problems, one for each of the old objectives. Students can attempt as many of those pages as they want or need to do.
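The exam schedule in that example can be simulated in Python. This sketch assumes a fixed number of new objectives per exam (four, matching the example), that each exam includes one page for every objective covered so far, and, as a simplification, that a student attempts every page they haven’t yet met. The `passes` argument is a hypothetical stand-in for which pages the student completes satisfactorily.

```python
def exam_pages(exam_number: int, per_exam: int, objectives: list[str]) -> list[str]:
    """One page per objective covered so far (exam_number is 1-based)."""
    return objectives[: exam_number * per_exam]


def take_exam(pages: list[str], met: set[str], passes: set[str]) -> set[str]:
    """Attempt only pages still needed; `passes` = pages done satisfactorily."""
    attempted = [p for p in pages if p not in met]
    return met | {p for p in attempted if p in passes}


objectives = [f"O{i}" for i in range(1, 13)]  # hypothetical: 12 objectives, 4 per exam
met: set[str] = set()
met = take_exam(exam_pages(1, 4, objectives), met, passes={"O1", "O2", "O4"})
print(exam_pages(2, 4, objectives))  # ['O1', 'O2', 'O3', 'O4', 'O5', 'O6', 'O7', 'O8']
met = take_exam(exam_pages(2, 4, objectives), met, passes={"O3", "O5", "O6"})
print(sorted(met))  # ['O1', 'O2', 'O3', 'O4', 'O5', 'O6']
```

On the second exam, the student picks up O3 (missed the first time) alongside the new objectives O5 and O6.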

Instructors may offer other ways to reattempt an objective, such as scheduling a new attempt during office hours, or on a dedicated “reassessment day” that happens between exams.

Because MBT focuses only on tests or quizzes, students typically need to meet an objective only once. To avoid the issue of students completing an objective early in the semester and then forgetting it, some instructors identify a small list of core objectives that must be “re-certified” on a final exam, ensuring that feedback even on completed objectives is still valuable.
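A Python sketch of the re-certification rule, assuming that a non-core objective counts once it is met during the term, while a core objective must also be met again on the final exam. The objective names and the core list are hypothetical.

```python
def objective_counts(
    objective: str,
    met_during_term: set[str],
    recertified_on_final: set[str],
    core: set[str],
) -> bool:
    """Non-core objectives need one success; core ones must also pass on the final."""
    if objective in core:
        return objective in met_during_term and objective in recertified_on_final
    return objective in met_during_term


core = {"Solve global optimization problems"}  # hypothetical core list
met = {"Solve global optimization problems", "Compute limits"}
print(objective_counts("Compute limits", met, set(), core))  # True
print(objective_counts("Solve global optimization problems", met, set(), core))  # False
```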

What situations is Mastery-Based Testing best suited for? MBT has the great virtue that it can be added to almost any existing class. Only the tests or quizzes need to be changed, and the MBT system produces a percentage that can be used in a traditional weighted-average grading system. Students may see less benefit than they would from a whole-class conversion of the assessment system. However, this is also more accessible to instructors who are required to have certain grade weights, who teach in tightly coordinated sections, or who simply want to dip their toe into the alternative assessment world without completely changing their class.
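Here is how the MBT percentage could slot into an otherwise traditional syllabus, as a Python sketch. The category names and weights are hypothetical; only the "mbt_exams" entry comes from the MBT system, and the rest of the course is graded as before.

```python
# Hypothetical weights for a traditional weighted-average syllabus.
WEIGHTS = {"homework": 0.25, "mbt_exams": 0.50, "final_exam": 0.25}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9  # weights should total 100%


def course_percent(scores: dict[str, float]) -> float:
    """Weighted average of category percentages (each 0 to 100)."""
    return sum(WEIGHTS[k] * scores[k] for k in WEIGHTS)


# mbt_exams = percentage of objectives met, e.g. 12 of 16 objectives = 75%.
print(course_percent({"homework": 90.0, "mbt_exams": 75.0, "final_exam": 80.0}))  # 80.0
```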

That said, MBT works best in classes that have a well-defined and fairly short list of “topics” that can serve as objectives.

Putting it all together

I want to reiterate a key message from our last two posts: Don’t get too caught up in definitions and whether you’re doing SBG or MBT or Specs or whatever. These ideas are each valuable and work well in many places.

Nearly everyone who dips their toes into alternative assessments eventually creates a system that is a hybrid of several approaches, with unique elements as well. You can pick and choose elements that suit your class, your philosophy, and your situation, drawing inspiration from all of the ideas above.

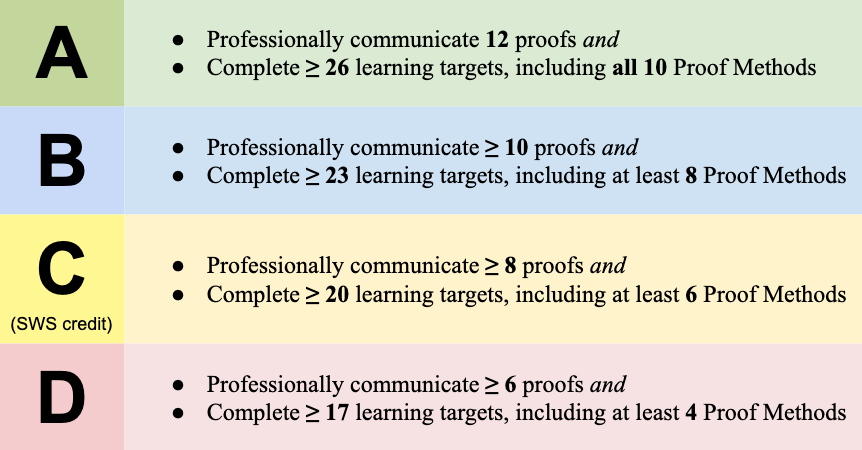

For example, I often use a mastery-based testing setup for quizzes that cover lower levels of Bloom’s taxonomy, but I also use Specifications grading for papers or projects that address higher Bloom levels. How do these combine into a final grade? There are many ways, but the most common is to create a “bundle table” that specifies what must be done for each grade. You can see an example of that in this snippet from one of my Fall 2021 syllabi. There are learning targets coming from an SBG-like system, but also “Professional communication” coming from a portfolio of proofs (mathematical writing).
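A bundle table can be read as a list of minimum requirements, checked from the highest grade down. In this Python sketch the two categories echo the syllabus snippet (learning targets and professional communication), but the thresholds are hypothetical and are not the numbers from that syllabus. Note that extra work in one category never makes up for a shortfall in another.

```python
# Hypothetical bundle table; these thresholds are NOT taken from the author's syllabus.
BUNDLES = [
    ("A", {"learning_targets": 18, "professional_communication": 6}),
    ("B", {"learning_targets": 15, "professional_communication": 5}),
    ("C", {"learning_targets": 12, "professional_communication": 3}),
    ("D", {"learning_targets": 8, "professional_communication": 1}),
]


def bundle_grade(completed: dict[str, int]) -> str:
    """Highest grade whose every requirement is met; categories are never averaged."""
    for letter, requirements in BUNDLES:
        if all(completed.get(k, 0) >= n for k, n in requirements.items()):
            return letter
    return "F"


print(bundle_grade({"learning_targets": 17, "professional_communication": 6}))  # B
print(bundle_grade({"learning_targets": 20, "professional_communication": 2}))  # D
```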

Whatever you do, I hope you have a better sense of some of the common approaches that are out there.

Click here to receive Grading for Growth in your inbox, every Monday.

[1] Many people still use a multi-level rubric, such as the EMRF rubric, with these systems. However, the different levels still divide into just two categories: those that are satisfactory (usually E and M) and those that aren’t. Levels within a category aren’t averaged and don’t count differently. The differences between the rubric levels are all about the feedback they provide, usually in actionable terms (for example, “R” describes the work as “revisable”).

[2] James B. Collins, Amanda H. Ramsay, Jarod Hart, Katie A. Haymaker, Alyssa M. Hoofnagle, Mike Janssen, Jessica S. Kelly, Austin T. Mohr & Jessica OShaughnessy (2019): Mastery-Based Testing in Undergraduate Mathematics Courses, PRIMUS, 29:5, 441-460. Available at: https://doi.org/10.1080/10511970.2018.1488317.