David's New Year Resolutions

How will I change my approach to alternative grading in 2023?

Welcome to 2023! To start the year, Robert and I will be posting our new year resolutions for alternative grading: Things we’re planning to do or change about our grading in 2023.

This week, I’m bringing you two of my new year’s resolutions. To give a concrete example of my own reflective process in action, I’ll show how I’m putting these resolutions into practice in two classes I’m teaching this semester. As you read this, it’s our first day of classes here at Grand Valley State University, so these resolutions are already in action. Watch for updates throughout the semester too.

Simplify, simplify

Resolution: Simplify my hybrid grading system to make it easier to understand and use.

If you’ve been reading our blog for a while, you know that we constantly say: Keep it simple! A simple grading system is easier for everyone to understand, students and instructors both. Simpler systems get out of the way and let students focus more on learning and growth. This is good advice, and it’s also advice that I need to work on following.

This semester, I’m teaching MTH 210: “Communicating in Mathematics” for the nth time, where n is a rather large number. This “introduction to proofs” class is a bridge into upper-level mathematics, where the emphasis is on logical arguments, aka proofs. So MTH 210 is focused heavily not just on learning new mathematical ideas, but on writing and communicating arguments that use those ideas. In fact, we focus so much on communication that this class satisfies my university’s General Education requirement for writing-intensive courses.

For many years, I’ve used a hybrid of Standards-Based Grading and Specifications Grading in MTH 210. Over time, I’ve realized that this system is too complex, and it’s time to simplify it. Here’s a quick outline of how it has worked in the past.

The standards are used when assessing specific mathematical content. Here are a few examples of standards I’ve used:

P.3: Write a correct proof by contradiction.

S.1: Create sets using set builder notation and translate sets into this notation.

L.4: State logical equivalences and prove them using a truth table.

On the other hand, specifications are used when assessing writing. When grading writing, I decide holistically whether it meets these specifications (there are more; this is just a snippet):

Include a correctly worded theorem statement.

State all assumptions clearly and concretely, and state what will be proved.

Define all variables before they are used.

Use appropriate symbols in formulas, and avoid excessive symbols otherwise.

I also have a document that details the meaning of each of these specifications, with examples, and we discuss and practice them often in class.

Mathematical proofs intertwine these two ideas. Proofs are fundamentally about mathematical ideas, and so I assess proofs for meeting mathematical content standards. But proofs are also cohesive pieces of writing that try to make a unified, logically sound argument – which I assess holistically using specifications. The interaction between standards and specifications in the same piece of writing gets tricky: Is this error a communication issue (specifications)? Does it reveal a problem with mathematical content knowledge (standards)? If so, which standard? Proofs bring multiple ideas together, making it hard to pull apart exactly which standard is met or not. If part of a proof is confusing, is that a writing issue, does it reveal an underlying logic problem, or both?

Students often found all of this confusing: Their “grades” for a proof involved both a list of marks on standards, plus a single mark for holistically meeting the specifications, even though the specifications themselves are also a list that sure looks a lot like the list of standards.

So this semester, I’m simplifying while also playing to the strengths of each grading style. Here’s my plan:

Standards are best suited for assessing discrete items that can be judged separately. So I’ll only use standards on weekly quizzes, which will include fairly direct questions, at low levels of Bloom’s taxonomy, about recent mathematical topics. Their goal is to ensure that students have a basic understanding of each topic.

Specifications are holistic: They’re best for longer pieces of writing that focus on an integrated understanding. That’s exactly what proofs are, so I’ll use specs – and only specs – on written proofs, which engage the higher levels of Bloom’s taxonomy. One of my specifications will be “Have no important errors or omissions in mathematical reasoning or justification”, and I’ll give feedback that’s phrased in terms of relevant standards – but there won’t be any grades for those standards on the proofs, just an indicator of whether the student has met the specifications or not.

This clear division of grading systems is a lot simpler, and it has another side-benefit of simplifying my list of standards. I used to require students to meet most standards twice, once on a proof and once on a quiz. But the requirement wasn’t consistent: Some standards applied mostly to proofs, or mostly to quizzes, so I had slightly different requirements for each. A few standards felt more (or less) important, and had to be “checked off” more (or fewer) times compared to the others. Altogether this made for a snarled mess where students had to pay close attention to the logistics, which distracted from their learning.

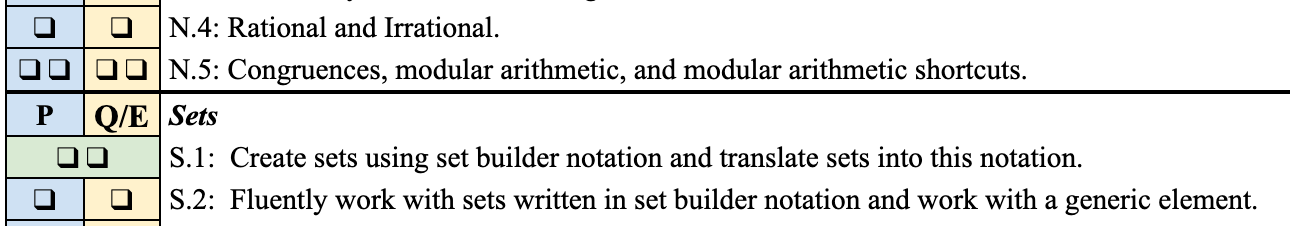

Here’s a snippet from a past list of standards, showing the variety and complexity of requirements (the left column of checkboxes labeled “P” was for meeting the standard on Proofs, the right column “Q/E” was for Quizzes or Exams, but S.1 needed two checks anywhere, and N.5 needed two checks in each category… sigh):

By simplifying and streamlining my grading system, I was also able to simplify my list of standards considerably. I eliminated standards that only apply to proofs – I just integrated those into the specifications instead – leaving a smaller and more streamlined list of standards for quizzes. I went from 29 standards to 18!

Here’s how that same list of standards looks now:

Much simpler, right? By the way, “core” standards are ones that need to be re-done on the final exam in the style of Joshua Bowman and Hubert Muchalski.

Overall, I’m much happier with this simplified and streamlined assessment approach. It feels less like forcing square pegs into round holes, and more like using grading systems to support student learning.

Focus on growth

Resolution: Value and encourage growth for its own sake, in addition to specific achievements.

This blog is called Grading for Growth, and undoubtedly one of the greatest strengths of the Four Pillars model is that it gives students room to grow without penalty.

But there’s also a common issue that comes up: If I’m grading student work using clear criteria (e.g. standards or specifications), then ultimately I care about students meeting those criteria. That’s true regardless of whether meeting the criteria required a lot of work, or if it was easy for the student. A student who makes huge gains in understanding, but not enough to meet a standard, still earns a mark of “not yet”.

This isn’t necessarily a bad thing: After all, one of the biggest strengths of alternative grading is the clear link between course grades and achievement of the course’s goals. Plus, reassessments without penalty allow students to go back, learn, grow, and hopefully meet the criteria on a future attempt.

But I also believe that growth itself is a valuable goal, in addition to any specific course content. Yet that goal isn’t explicitly reflected in grades.

A good example of this is the Euclidean Geometry course that I’ll be teaching this semester. I’ve taught Euclidean Geometry many times before, most recently in a fully ungraded format. It’s a course for future teachers, and in some sense, there’s no new course content at all: We review and extend geometric topics that students have already learned through elementary, middle, and high school (and will likely teach in those same grades).

But in another sense, the course content is all about growth: We spend most of class learning why these things – which they already “know” – are actually true, with students learning to create and present explanations much as teachers do every day. This is often a revelation, helping move students from “it’s a fact, I know it’s true” to a more creative, inquisitive mindset. We build connections between geometric ideas, and – most difficult of all – discover that there isn’t just one “correct” way to do geometry, or even math. Rather, there’s a wealth of logically sound and perfectly valid mathematical worlds that we can choose between depending on the context. This last idea challenges some pretty fundamental beliefs that many pre-service math teachers hold, and it can be both difficult and exhilarating for students to face these issues head on.

All of this is to say that what I care most about in Euclidean Geometry is how a student grows as a mathematician and as a future teacher. But in the past, I’ve focused mostly on the easier-to-assess aspects of geometry, that is, the specific geometric content. That was a proxy for the type of growth I wanted to see most. Even with ungrading, the narrative descriptions I developed for each grade referred mostly to how well students understood the underlying geometric ideas, even for the highest grades.

So this year, I’m taking a big leap to explicitly focus the course on growth. How? I’ll still use an ungraded approach, in which I collaborate with students to discuss their progress, and students construct a final portfolio that demonstrates how they’ve earned a certain grade. It’s the criteria for those grades that will change significantly. Here’s my overall plan:

To earn a C or higher, students must do two things: Show that they’ve understood many fundamental geometric concepts (from a list I provide), and also been a “good class citizen” (e.g. take an active part in discussions, do prep work, etc.).

To earn a B or higher, students must consistently share their ideas with the class, either through daily presentations, or through a written option I call a “class journal”. This centers the importance of developing skillful communication in future teachers.

To earn an A, students must choose an individual “direction for growth” and, by the end of the semester, demonstrate significant improvement in that area. I’m planning to provide two options: growing towards excellent mathematical writing skills, or growing towards excellent presentation skills. Students will pick one of these and develop a specific plan to achieve it with my guidance. I’ll tailor my feedback and support (and some assignments) towards their area of growth, and we’ll discuss their progress and make adjustments during each check-in meeting. In the final portfolio, students will show how they’ve improved and followed their tailored plan over the course of the semester.

The grades build on each other, so (for example) to earn a B, a student must do everything for both a C and a B. To earn an A, a student must show how they’ve accomplished everything in this list.

I’m only offering two options for the “direction for growth” in my first attempt, but in the future I’m hoping to let students identify their own, fully customized, direction for growth. This first time, I’m keeping it simple until I’m a bit more comfortable with the details of this approach.

I can imagine objections and concerns about this approach to grading for growth. For one thing, this doesn’t mean that I’m giving up on assessing geometric content. Quite the contrary: I’m ensuring that geometric content knowledge is essential for passing the class with a C. But to do better than passing, students must demonstrate not just knowledge, but growth.

A likely concern in a different direction: Won’t students just pick a “direction for growth” that they’re already strong in? That’s why, in their final portfolio, students must actually show improvement in their chosen area over time, not merely meet a specific standard. Might students deliberately hide a strength, so that they merely appear to grow? I seriously doubt it: This is a highly interactive and collaborative class. I get to know students extremely well through close collaboration, and I doubt that a deliberate attempt to hide their abilities would work.

But more than any of this, I really do intend to start by trusting my students: In my experience, students genuinely want to grow and learn, and if given the opportunity and a safe place to try, fail, and try again, they’ll do so. My biggest change here is making it explicit that I value that process of growth.

Both of my resolutions have resulted in some big changes to classes that I’ve taught many times. That’s always a tough thing to do – it’s just so much easier to keep doing what I’ve done before – but I know from experience that it’s worth the struggle. I want to keep growing and learning, just like my students.

One suggestion for the 'growth' part of the grade: The final step in the trust process could be to turn this part of your assessment over to the students. I tried that last year for the first time because I wanted a PreCalc upgrading course to be about more than mastering the content.

When adult students come back to school (in this case for math upgrading) they tend to fall back on their high school ways. I wanted my group to become more positively and effectively self-managing -- to evolve new adult student success personas they could carry forward into whatever future post-sec programs they entered. For everyone this turned out to have to do with striking a successful work-life balance, with seeking effective help, with figuring out what they had to do, when, how much, and why to meet their goals. The one thing I insisted on was that this portion of the grade not be averaged. Their course-end self assessment would be the mark for that portion of their grade (and I gave it 20%). I didn't want early errors in judgement to be held against them, as it's impossible to figure out how to become a more effective learner without making mistakes and figuring out how to do better.

We began by collaboratively developing a self-assessment rubric which became a sort of generalized guide. My vision wasn't as clear as yours, but what I discovered was that 'growth' is measured very personally. Through regular 1-1 meetings, each student and I adapted and readapted that first rubric to reflect his/her needs and wants at the time and to decide what kind of evidence would be shared by each student to support his/her final self-evaluation. Each student's final version of the rubric met his/her unique needs and wants.

Although some students who were extremely anxious about final marks initially saw this as a way to pad their grades, by the end everyone was able to look back and see that the work they'd done had far exceeded the expectations reflected in our first class rubric -- no padding was needed! (Of course, I also structured the biggest part of the rest of their assessment so growth was unavoidable if they were going to get grades that would open the next doors they knocked on -- which helped a lot!) As the term went on, most students upped the ante on themselves and their evolving rubrics reflected that. Once the students came to trust the process, they were able to experience the joy of becoming self-directed adult learners -- even in math!

One of the biggest things I've learned in my past year of experimenting with alternative grading is that different grading systems make sense for different course content. You do a good job in this article of identifying two different systems for your different classes. But I don't know if this or other articles explicitly suggest not trying to cram all classes into one framework. So, that's my small suggestion for a message to share that might save future headaches! Happy new year!