Case Study: Hubert Muchalski's hybrid assessment system for Organic Chemistry

Today, we bring you another case study from our upcoming book, “Grading for Growth.” This one is a hybrid system that combines elements of standards-based grading, specifications grading, and other ideas that support the four pillars of assessment.

Most alternative assessment systems end up being “hybrid” as instructors combine elements to find what works best for them, their students, and their classes. There’s not just one “right” way to do things!

We would love to hear your feedback on this case study. Do you find it helpful? Is there something more you’d like to know? Are there unnecessary extras we added in? Let us know in the comments!

Hubert Muchalski[1] is a tenured professor in the Department of Chemistry and Biochemistry at California State University, Fresno, a primarily undergraduate institution in the CSU system. His Organic Chemistry 2 course is taken by chemistry and biochemistry majors as well as students in pre-health majors, and he typically has 40–50 students per class. In its most recent iteration, Muchalski taught Organic Chemistry 2 in an online synchronous format, leading live Zoom sessions. Students completed the assessments outside of class time rather than during the live sessions.

As Muchalski says, “Organic chemistry is modular. We begin by learning simple low-level skills which get assembled into high-level skills.” His assessment system helps students understand where they are in terms of assembling these skills. To ensure that students see the big picture as well as the details, Muchalski uses a combination of standards and specifications on different types of assessments.

Discrete skills are assessed through standards on “checkpoint” quizzes. Synthesis and cross-cutting skills are assessed on “Application/Extension Problems” (AEPs), which represent more challenging, integrated work at the high end of Bloom’s taxonomy and are graded using specifications. To show their understanding of a key overarching concept, students build a “Mechanism Portfolio” throughout the semester, which is also graded using simple specifications. Finally, the class includes a final exam required by his department; it uses multiple-choice questions and is graded with points.

The key discrete skills are represented by 15 standards, 6 of which are “core”. These standards are assessed on “checkpoints,” which are essentially quizzes with one question per standard.

The checkpoint standards are relatively broad: each chapter of the course textbook is covered by roughly one or two standards. Muchalski describes each standard as being assessed by a single “multi-layered problem” on each checkpoint. Here are some examples of these standards:

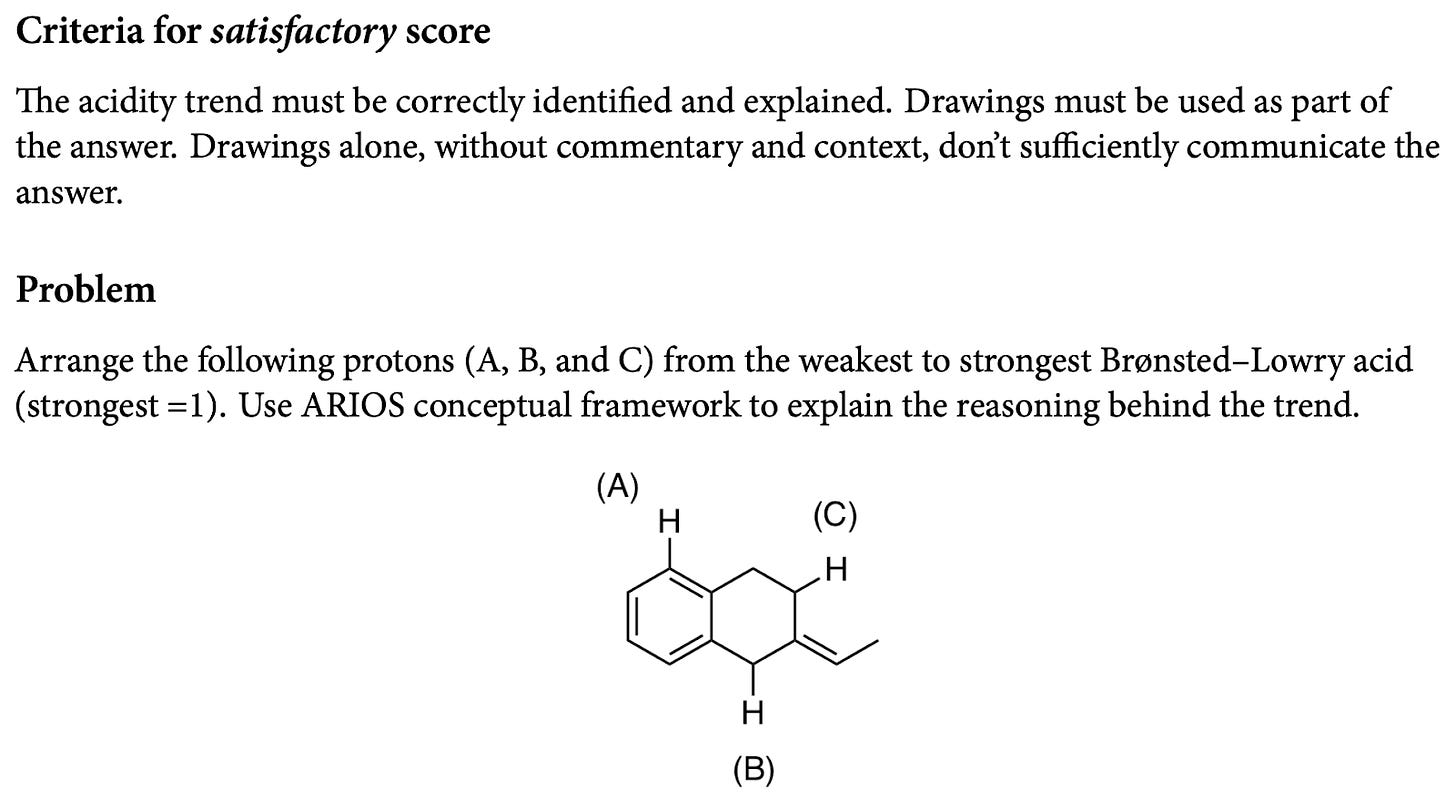

(CORE) I can use the Brønsted-Lowry theory to explain pKa trends of organic acids and correlations between structure and thermodynamic stability of organic bases.

(CORE) I can use curved arrow notation to draw mechanisms and explain reaction pathways involving aromatic compounds.

I can solve synthesis problems involving conjugated pi systems.

I can use retrosynthesis method and solve synthesis problems involving functional group interconversions and aromatic substitution reactions.

On a checkpoint, each standard is marked “Pass” or “No pass.” Students must pass each standard twice during the semester to earn credit for it, and earning credit on all core standards is required for a B or higher. As is common when assessing discrete skills, students reassess a standard by attempting a new problem on a later checkpoint. Checkpoints are cumulative, so each one offers new attempts at all previous standards.
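For readers who track marks in a spreadsheet or script, here is a minimal Python sketch of the checkpoint rule as described above: a standard earns credit once it has been passed twice, and the B-or-higher gate checks that every core standard has credit. The standard labels, the `Attempt` record, and the function names are hypothetical; in the actual course these marks live in the LMS gradebook.

```python
from collections import Counter
from dataclasses import dataclass

PASSES_NEEDED = 2  # each standard must be passed twice during the semester


@dataclass(frozen=True)
class Attempt:
    standard: str  # hypothetical standard label, e.g. "S1"
    passed: bool   # "Pass" (True) or "No pass" (False) on one checkpoint


def standards_with_credit(attempts: list[Attempt]) -> set[str]:
    """Return the standards that have been passed at least twice."""
    passes = Counter(a.standard for a in attempts if a.passed)
    return {s for s, n in passes.items() if n >= PASSES_NEEDED}


def core_gate_met(attempts: list[Attempt], core: set[str]) -> bool:
    """True if every core standard has credit (required for a B or higher)."""
    return core <= standards_with_credit(attempts)


if __name__ == "__main__":
    core = {"S1", "S2"}  # hypothetical subset of the 6 core standards
    history = [
        Attempt("S1", True), Attempt("S1", False), Attempt("S1", True),
        Attempt("S2", True), Attempt("S3", True), Attempt("S3", True),
    ]
    print(sorted(standards_with_credit(history)))  # ['S1', 'S3']
    print(core_gate_met(history, core))            # False: S2 passed only once
```

Counting passes rather than averaging scores is what eliminates the “one and done” lucky pass: a single success never earns credit on its own.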

Muchalski notes that he used to ask multiple questions per standard, with “Pass” requiring (for example) 3 of 4 or 4 of 5 questions to be completed correctly. Moving to a single, carefully chosen question but requiring each standard to be completed twice is one of his biggest improvements:

It was difficult to write quizzes that contain a balanced set of questions. For example, in a 4-question quiz, one of them was asking students to write the mechanism. But, if they nailed the first three questions and had no clue about mechanism, they could get an A in the course and have zero clue how to write a sensible mechanism (disqualifying for A in my view). By asking students to answer a single problem two times, I eliminated “one and done” lucky passes. Students didn’t move to more challenging parts of the course with poor understanding of fundamentals.

Checkpoint quizzes are given asynchronously: Muchalski designates a class day as a “checkpoint day” and holds no regular class session that day. Students can work on the checkpoint during any 3-hour window within that 24-hour day, with no need for invasive remote proctoring; the course’s learning management system (LMS) enforces the 3-hour limit. To begin, students submit a “dummy assignment” on the LMS that asks them to agree to an honor pledge (for example, “By submitting my work, I certify that I have only used permitted resources as described in the instructions”) and asks whether they want to spend a token to extend the window by one hour. Once the pledge is submitted, the LMS releases a PDF of the checkpoint questions and starts a timer. Muchalski writes several versions of each question, so students don’t all see the same set of questions. Students scan their work with a phone or scanner and upload it to the LMS.
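The window arithmetic is simple enough to state in a few lines. This Python sketch assumes the timer starts when the pledge assignment is submitted, and it ignores how the LMS handles a window that would run past the end of the checkpoint day. The function names and the date are hypothetical; in the course itself, the LMS does the enforcing.

```python
from datetime import datetime, timedelta

BASE_WINDOW = timedelta(hours=3)      # every student gets a 3-hour window
TOKEN_EXTENSION = timedelta(hours=1)  # spending one token adds one hour


def checkpoint_deadline(start: datetime, used_token: bool) -> datetime:
    """Deadline for a checkpoint whose timer started at `start`."""
    window = BASE_WINDOW + (TOKEN_EXTENSION if used_token else timedelta(0))
    return start + window


def submitted_on_time(start: datetime, submitted: datetime, used_token: bool) -> bool:
    """True if the upload arrived no later than the student's deadline."""
    return submitted <= checkpoint_deadline(start, used_token)


if __name__ == "__main__":
    start = datetime(2024, 3, 5, 14, 0)  # hypothetical start time
    print(checkpoint_deadline(start, used_token=True))  # 2024-03-05 18:00:00
```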

Muchalski reports virtually no academic dishonesty with this system. Having multiple versions of problems helps, but more importantly, the lower stress of this kind of assessment reduces the incentive to use improper resources. When in doubt, he can ask a student to come to office hours and rework a problem “live.”

Here is an example of a checkpoint problem for the standard “(CORE) I can use the Brønsted-Lowry theory to explain pKa trends of organic acids and correlations between structure and thermodynamic stability of organic bases.” In particular, notice the specifications for “Pass” that are listed above the question:

To assess synthesis and cross-cutting ideas, Muchalski assigns Application/Extension Problems (AEPs). These are specifications-graded assignments, somewhat like mini-projects. As Muchalski says, “The goal is to stretch students' understanding and ask them to use skills that cannot be siloed into a single chapter. Chemistry is a central science with plenty of connections to physics, biology, medicine.” AEPs assess at higher levels of Bloom’s taxonomy. Muchalski periodically posts a new AEP related to recent topics. Each AEP remains available for several weeks, so several are typically open at the same time. Students choose which AEPs to complete; no particular AEP is required, since AEPs are an optional route to a higher grade. Each week, students can submit either one new AEP or a revision of a previous one.

Here is the introduction and specifications page from one AEP:

The AEP question itself:

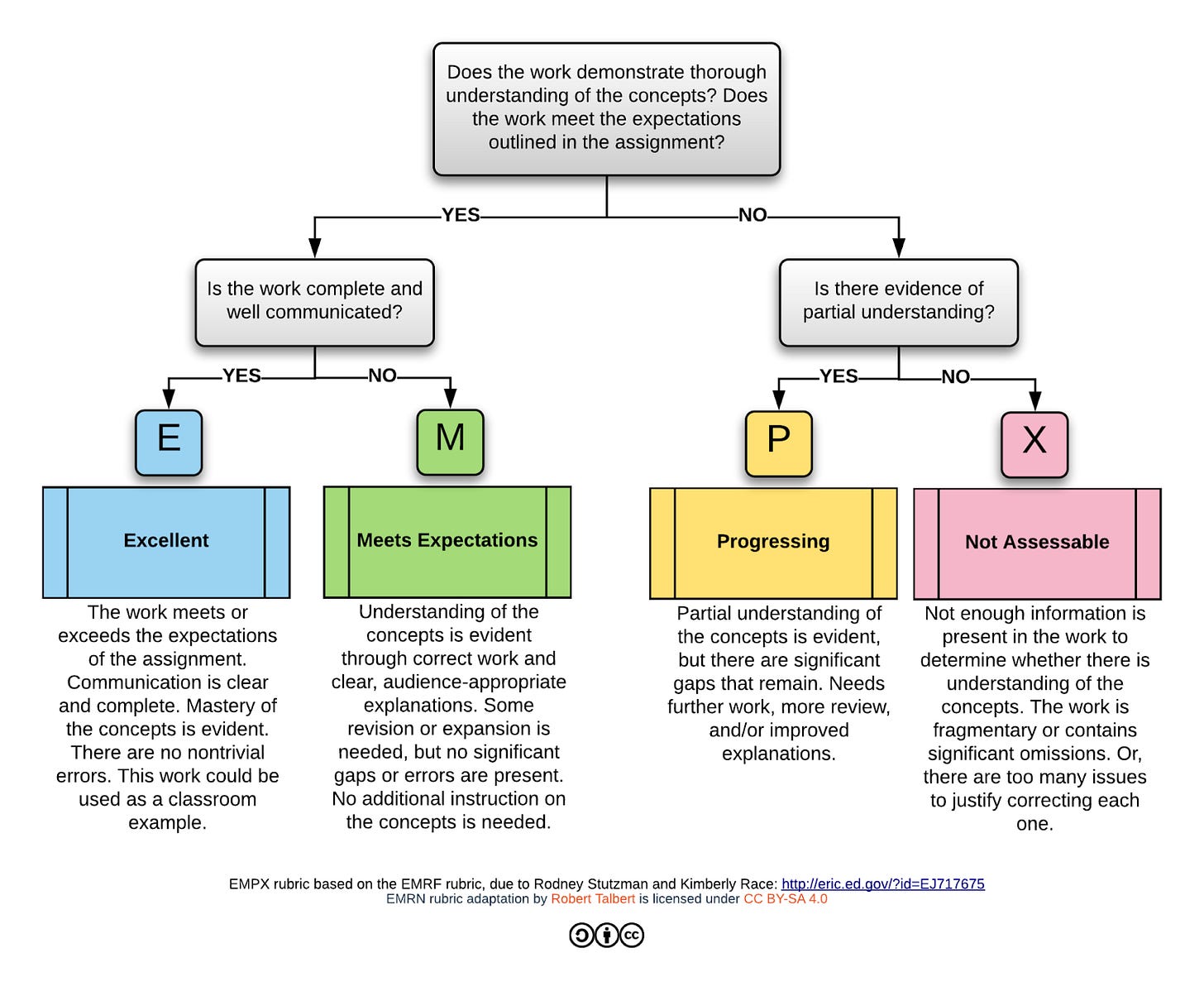

The specifications for AEPs cover both organic chemistry content and clear communication. They are presented as part of a common four-level rubric, the EMPX rubric: Excellent, Meets Expectations, Progressing, or Not Assessable. This is a variation on the EMRF rubric (Excellent, Meets Expectations, Revisable, Fragmentary[2]) first described by Stutzman and Race.

The EMPX rubric can be used to assess assignments for both correctness and overall communication quality. We illustrate it using a flow chart, which also helps students understand the instructor’s grading decisions:

Each AEP earns a single mark for the overall level of work. Because each AEP has its own instructions and requirements, the flow chart’s criteria “The work demonstrates thorough understanding of the concepts” and “the work meets the expectations outlined in the assignment” refer to those assignment-specific requirements.

Notice how the EMPX specifications combine content knowledge and communication. While both “Excellent” and “Meets Expectations” earn credit, “Excellent” reflects a higher level of communication. These detailed assignments are better suited to revision than to attempting new problems. Students can revise one AEP solution each week, and Muchalski grades and returns it with detailed feedback. However, an AEP that earns an X (indicating severely incomplete or fragmentary work) can be revised only if the student spends a token, as described below.
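To see the EMPX rules at a glance, here is a small Python sketch. It encodes only what this case study states: E and M earn credit, P and X do not, and X work can be revised only by spending a token. It also assumes, based on the weekly limit and token rules in this post, that any AEP submission beyond the first in a week costs a token. The names `Mark`, `earns_credit`, and `revision_needs_token` are hypothetical, and the flow chart’s assignment-specific criteria are not modeled.

```python
from enum import Enum


class Mark(Enum):
    """The four EMPX marks; each AEP receives exactly one."""
    EXCELLENT = "E"
    MEETS_EXPECTATIONS = "M"
    PROGRESSING = "P"
    NOT_ASSESSABLE = "X"


def earns_credit(mark: Mark) -> bool:
    """E and M both earn credit; P and X do not."""
    return mark in (Mark.EXCELLENT, Mark.MEETS_EXPECTATIONS)


def revision_needs_token(mark: Mark, submissions_this_week: int) -> bool:
    """Assumed reading of the rules: a token is needed to revise X work,
    or to submit beyond the one free AEP submission per week."""
    return mark is Mark.NOT_ASSESSABLE or submissions_this_week >= 1


if __name__ == "__main__":
    for mark in Mark:
        print(mark.value, earns_credit(mark), revision_needs_token(mark, 0))
```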

Because these are substantial assignments held to a high standard, students may need to revise several times. Reflecting this, the final grade requirements include only a few AEPs, and only an “A” requires an Excellent AEP mark.

Another graded assessment in Muchalski’s class is the Mechanism Portfolio. It illustrates an especially useful type of assessment: a collection of work, assembled over time, that lets students show learning and growth on topics that run throughout the semester. In particular, the Mechanism Portfolio lets students demonstrate their understanding of reaction mechanisms, the processes that explain how chemical reactions occur and a cross-cutting idea in organic chemistry. As Muchalski says:

In organic chemistry we “tell stories” how chemical reactions occur. A reaction is a series of bond-breaking and bond-forming events that can be communicated using mechanisms. Mechanisms also help explain unexpected reaction outcomes. [...] Many students find it easy to memorize reactions, before and after (reactant → product), but could not explain how it works.

The Mechanism Portfolio is a collection of explanations of reactions in terms of specific mechanisms. Mechanisms are typically first discussed in class, in the context of other topics. Each week, students are assigned to review a mechanism from the textbook and then apply it to several new situations. Each explanation is graded Pass/Fail and passes only if it is completely error-free. These submissions make up the “portfolio,” and the number of fully correct items in it is part of the final grade requirements. Instead of revisions or reassessments, students can submit drafts for feedback before each portfolio mechanism is due.

Muchalski finds that these portfolio assignments help in many other parts of the class, since mechanisms are so central to organic chemistry. He says that “the assignment is equivalent to ‘doing the reps’” as you might at a gym. He believes that rereading the textbook and practicing mechanisms helps students on checkpoints in particular, since checkpoint solutions often involve mechanisms. Muchalski’s portfolio problems are, effectively, a form of spaced practice.

There are many ways to use portfolios. Another common approach, which you might see in other classes, is to have students assemble work showing their growth in each key area (or standard) of the class and submit it with reflections at the end of the semester.

The final exam is a multiple-choice exam provided by the American Chemical Society (ACS), a professional society for chemists. The same exam is used not only across sections of this course but also in Organic Chemistry courses at multiple institutions. How does it fit into an otherwise alternatively assessed class? The final exam score is simply another requirement in the final grade table. In the grade table below, notice that the final exam requirements are fairly light. That reflects the exam’s unusual status: it is the only traditional high-stakes exam in the class, it uses an unfamiliar format, and it offers no opportunity for reassessment. Setting light requirements is a common way to handle a required common final, especially one scored with points. Another option, for instructors who have the flexibility, is to use the final exam to add a “+” or “-” to a student’s grade.

In addition, Muchalski’s class is flipped, so he uses the online tool Perusall to help students annotate and discuss pre-class readings. This work is recorded only for completion and effort.

Here is how final grades are determined:

As usual, an “F” is earned by not fully meeting the requirements for any other letter grade. Fresno State does not award “half” or partial grades, so these are all the possible grades.

To provide flexibility, Muchalski uses a “token” system. Students begin the semester with 5 tokens, and their current balance is recorded in the LMS gradebook. At any time, a student can spend one token to submit a second AEP revision in a given week, revise an AEP that earned an “X,” or extend a checkpoint window by 1 hour. Using a token requires no permission, so students get flexibility exactly when they need it.
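The token rules amount to a tiny ledger. Here is a minimal Python sketch, assuming a per-student record with a starting balance of 5 and exactly the three uses listed above; the class name and the use labels are hypothetical, and in the real course the balance is simply a column in the LMS gradebook.

```python
from dataclasses import dataclass, field

STARTING_TOKENS = 5
# Hypothetical labels for the three token uses described in the case study.
TOKEN_USES = {
    "second_aep_revision",  # a second AEP revision in the same week
    "revise_x_aep",         # revise an AEP that earned an X
    "extend_checkpoint",    # add 1 hour to a checkpoint window
}


@dataclass
class TokenLedger:
    balance: int = STARTING_TOKENS
    history: list[str] = field(default_factory=list)

    def spend(self, use: str) -> None:
        """Spend one token; there is deliberately no approval step."""
        if use not in TOKEN_USES:
            raise ValueError(f"unknown token use: {use!r}")
        if self.balance < 1:
            raise ValueError("no tokens left")
        self.balance -= 1
        self.history.append(use)


if __name__ == "__main__":
    ledger = TokenLedger()
    ledger.spend("extend_checkpoint")
    print(ledger.balance)  # 4
```

Leaving out any approval step mirrors the point of the system: the only limit on a token is the balance itself.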

Muchalski has seen some major benefits from this hybrid system. Students report less stress around assignments in general. As Muchalski says, “Some don’t believe me that they can retake a quiz.” The reassessment and revision opportunities, along with the AEPs, ensure that students re-engage with earlier material they haven’t fully understood rather than leaving it behind. (Think about how different that is from the usual “one and done” approach that traditional exams encourage!) As a result, students report thinking deeply about chemistry in a way they haven’t before. Plus, a student nominated Muchalski for a teaching award based on his assessment system, and he won it!

Muchalski’s system shows how standards, specifications, and other items (such as the portfolio) can be used together to support different levels of learning. Standards support practicing discrete skills, while specifications encourage synthesis, process, and “putting it all together.” Muchalski’s mechanism portfolio encourages spaced practice, and he incorporates a required points-based final exam too.

I want to reiterate what I wrote at the top: There isn’t just one “right” way to use alternative assessments! While we’ve previously seen “pure” examples of Standards-Based Grading and Specifications Grading, many alternative assessment systems incorporate elements of each, as well as things unique to each instructor that suit their particular situation.

On this blog and in our upcoming book, we’re hoping to help readers understand the range of what can be done with alternative assessments—both how and why instructors make their choices. What works for us may not work for you. Keeping in mind some basic principles (like our four pillars of assessment), and with lots of advice and examples, you’ll be able to find your own mix that fits within our big alternative assessment tent.

Thanks for reading this case study! Do you find it helpful? Was there something you especially liked? What would you add or remove? Let us know in the comments!

[1] Hubert Muchalski would like you to know that his system is inspired by Robert Talbert’s alternative assessment system for his Discrete Mathematics class. We think Muchalski has made some helpful additions and revisions that make the system his own, and he does an excellent job of sharing his reasoning for using it. (Ed. note from RT: Seconded. I took notes.)

[2] Many variations of this rubric are used in alternative assessments, all with the same fundamental structure of two passing and two not-passing marks. (Typically the not-passing marks count equally and simply indicate relative progress toward passing.) One common concern is that the “F” (Fragmentary) in EMRF looks too much like a traditional “F” (Failing) grade, which has led to variants such as EMRN (“N” = “Not assessable”) and EMPX.